Since ancient times, humans have crafted instruments to make their lives easier. Craftsmen around the world were renowned for their skills, and items such as shining armour, hand-crafted swords and sparkling crown jewels were luxuries for those who could afford them. Then, in the 1700s, the Industrial Revolution spread across Europe and its colonies. Rising demand forced industrialists to look for a faster alternative, and it was here that the first turning machine was built. The reason behind it was simple: to increase precision while producing items at a faster rate.

To understand how the current generation of CNC machines came into being, it is critical to understand how their predecessors laid the foundation. The earliest machines used cams to control the machine tool. But this was still an early form of automation, and it required a human operator to supply and interpret information at an abstract level. Although punch cards had been invented by the early 18th century and developed further by the 19th century, it would be a long time before they were assimilated into the machining industry.

The next obstacle that industrialists faced was the tolerances required for machining. Complete automation required machines to hold tolerances on the order of a thousandth of an inch, and the answer lay in servomotors. By combining two servomotors to form a synchro, a closed-loop system was formed, which helped overcome this obstacle.
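The closed-loop idea can be illustrated with a minimal sketch: the controller repeatedly measures how far the tool is from its target and applies a correction proportional to that error until the error falls within tolerance. The function name, the gain value, and the simple proportional rule are all assumptions chosen for illustration; they do not describe how the historical servo and synchro hardware was actually implemented.

```python
def closed_loop_move(target, position=0.0, gain=0.5, tolerance=0.001, max_steps=1000):
    """Drive `position` toward `target` using proportional feedback.

    Hypothetical illustration only: `gain` and `tolerance` (in inches)
    are assumed values, not figures from any real machine.
    """
    steps = 0
    while abs(target - position) > tolerance and steps < max_steps:
        error = target - position   # feedback: measured deviation from the target
        position += gain * error    # correction proportional to the error
        steps += 1
    return position, steps


if __name__ == "__main__":
    final_position, steps_taken = closed_loop_move(target=1.0)
    print(f"stopped at {final_position:.6f} in after {steps_taken} corrections")
```

Without the feedback step, any slip or lag in the drive would go unnoticed and accumulate; with it, the remaining error shrinks on every pass until it is inside the required tolerance.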

The credit for inventing the earliest version of the numerical control (NC) machine goes to John T. Parsons and Frank L. Stulen. While working on the production of helicopter blades, Stulen applied the idea of punch cards to machining: one operator read out the numbers from the punch card, while two others operated the X and Y axes of the machine. While Stulen introduced the punch card, Parsons expanded the idea to include more points on the card, thereby consolidating the information onto a single sheet.

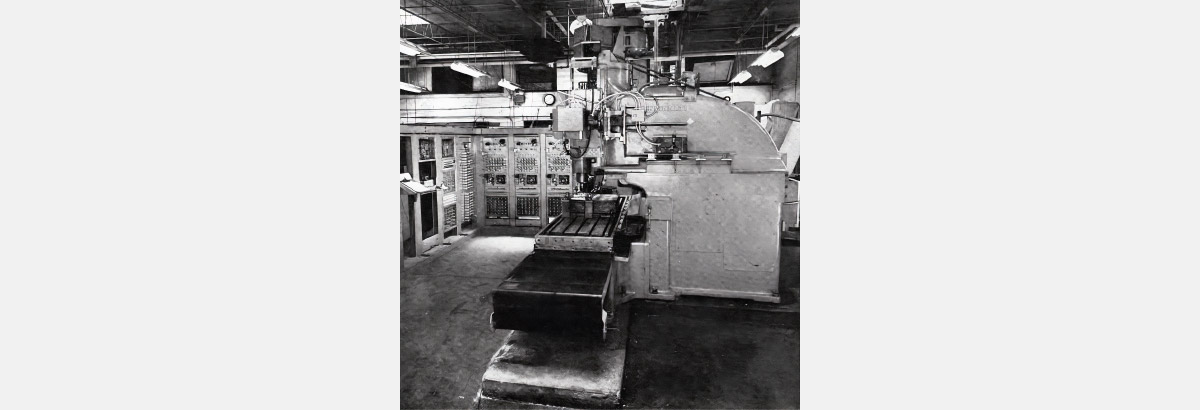

This idea was further developed by a team at MIT, which replaced the punch card with 7-track punch tape instead of the usual 1-track. They also added a digital-to-analog converter that increased the power delivered to the motors, thereby moving the controls faster. Although successful, this design proved extremely complex, requiring multiple vacuum tubes, relays and several moving parts, which made it expensive to build and maintain.

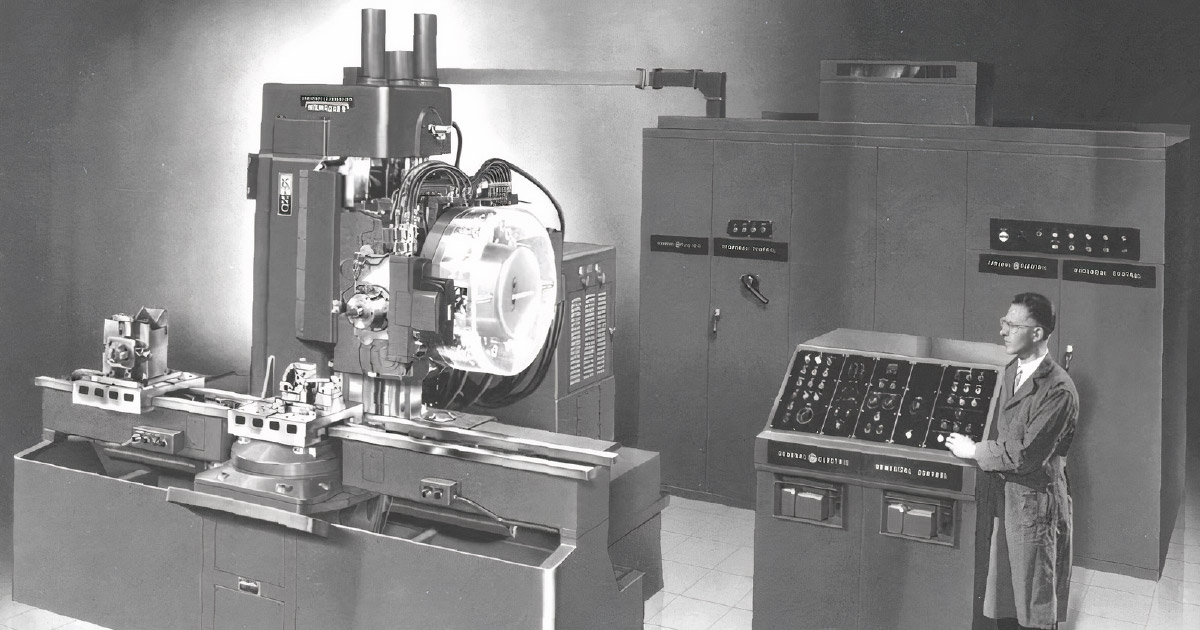

The first commercially available numerically controlled machine was announced in 1952. Built by Arma Corporation, it was a numerically controlled lathe.

The 1960s and 1970s saw the rise of computers. While the machining process itself had been automated, the data and commands were still prepared by human operators in order to produce the punch tapes used as inputs. As programming languages advanced rapidly and gained traction in the automation industry, they also found their way into numerical control.

In the late 1950s, the first programming language for numerical control machines was developed, and by the mid-1960s it was being used commercially. This marked the arrival of the computer numerical control machine, or CNC machine.

While Parsons and MIT were developing the machine that would revolutionise the manufacturing industry, General Motors was working on a way to digitize and store its various designs. This experimental work, carried out in collaboration with IBM, led to the DAC-1 project, the first instance of digitizing the design phase.

The DAC-1 converted 2-D drawings into 3-D models, which were then converted into APT commands and used to cut parts on the machine. At the same time, Lincoln Labs at MIT had succeeded in building its own software, Sketchpad, designed by Ivan Sutherland. This would form the basis for the EDM, or Electronic Drafting Machine, which became part of the first end-to-end CAD/CNC production system, used by Lockheed.

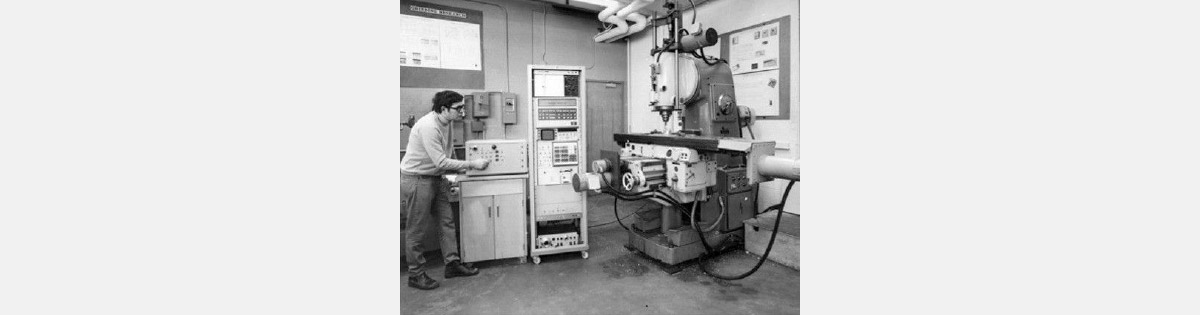

With microcomputers being introduced in the 1970s, handling the CNC machines using servo systems became expensive. Each machine had a dedicated small computer, which further simplified the process by placing the entire manufacturing process in one machine. The introduction of microprocessors further reduced the implementation costs. As a result, producing complex shapes became easier, and the setup found widespread use in the manufacturing industry.

Further developments in computer science led to the introduction of personal computers. The introduction of software enabled people with technical knowledge and avv, which was soon commercialized.

With this meteoric rise of CNC, it was not long before various programming languages were developed by different people. The most famous of these is G-code, which is still used in many CNC machines.

In 2001, MIT inaugurated the Center for Bits and Atoms, headed by Neil Gershenfeld. One of the projects born from this lab was Machines That Make, under which a desktop CNC machine was built by Jonathan Ward, Nadya Peek, and David Mellis in 2011. It was a 3-axis mill that ran on an Arduino microcontroller and could precisely mill various materials, while fitting on a desk and being portable.

From a room-sized machine run by operators to automated desktop-sized machines, the CNC machine has progressed rapidly over the past century. The introduction of the NC machine was said to be the start of the second industrial revolution, in which automation would slowly take over the industry. A century later, CNC is an integral cog in the machining industry. Further advancements may lie in incorporating artificial intelligence (AI) into manufacturing. But as of now, the power to create remains in human hands.